#5-Docker: Lightweight Containerization

In this newsletter, we’ll explore the history of container technology, the specific innovations that powered Docker's meteoric rise, and the Linux fundamentals enabling its magic. We’ll explain what Docker images are, how they differ from virtual machines, and whether you need Kubernetes to use Docker effectively. By the end, you’ll understand why Docker has become the standard for packaging and distributing applications in the cloud.

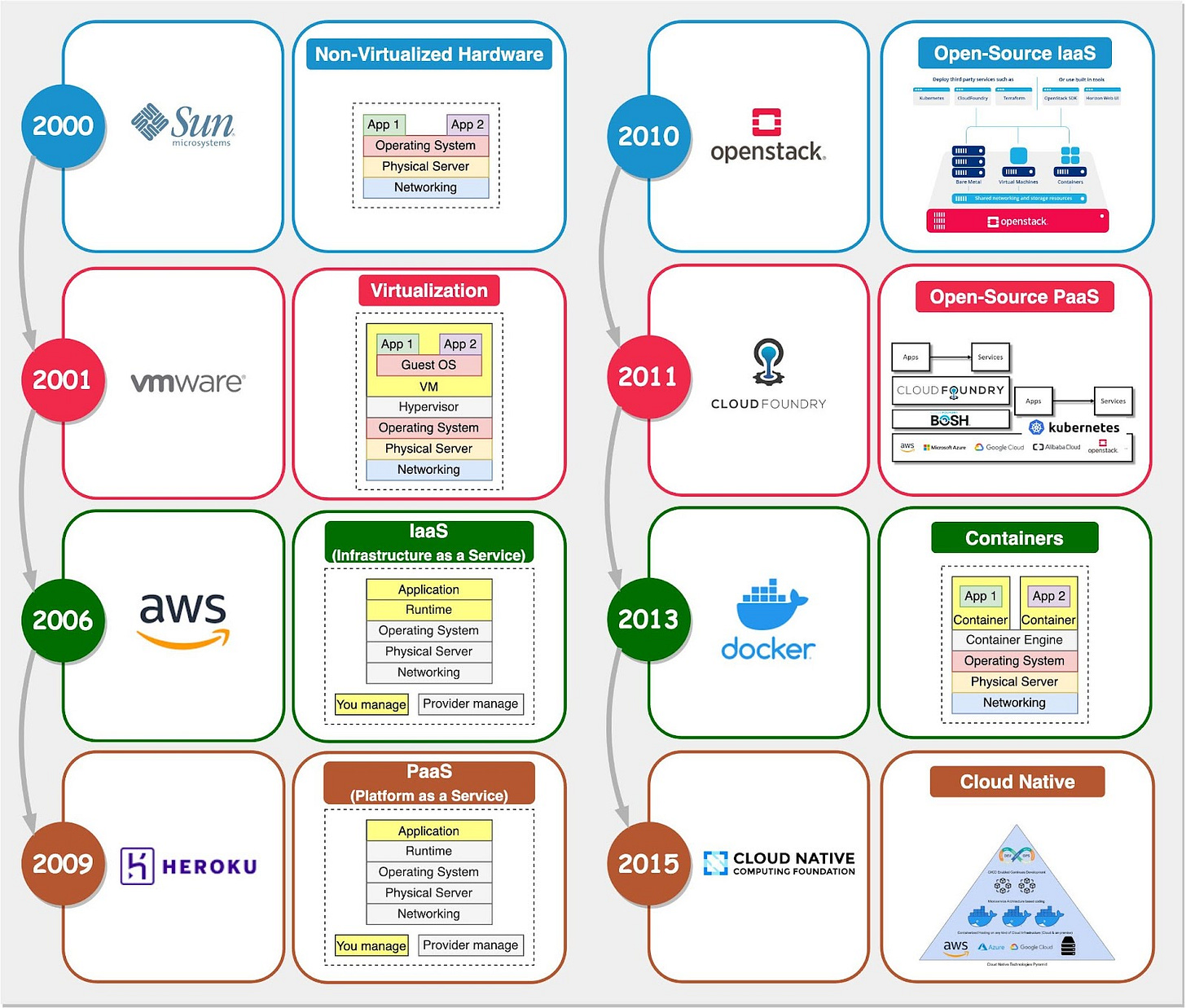

Backend infrastructure has evolved rapidly over the years, as illustrated in the timeline below:

Lightweight Containerization

Container technology is often compared to virtual machines, but they use very different approaches.

A VM hypervisor virtualizes the underlying server hardware, such as CPU, memory, and disk, so that multiple virtual machines can share the same physical resources. Each VM runs its own guest operating system on this virtualized hardware. Processes running on a guest OS can’t see the host's hardware resources or other VMs.

In contrast, Docker containers share the host operating system kernel. The Docker engine does not virtualize hardware or boot a guest OS. Instead, containers achieve isolation through Linux namespaces and control groups (cgroups).

Namespaces give each container its own view of processes, networking, mounts, and other resources. Control groups limit and meter usage of resources like CPU, memory, and disk I/O for containers. We’ll revisit these in more depth later.

This makes containers more lightweight and portable than VMs. Multiple containers can share a host and its resources, and they start much faster because there is no full guest OS to boot.

Docker is not “lightweight virtualization” as some would describe it. It uses Linux primitives to isolate processes, not virtualize hardware like a hypervisor. This OS-level isolation is what enables lightweight Docker containers.

Application Packaging

Before Docker’s release in 2013, Cloud Foundry was a widely used open-source PaaS. Many companies adopted Cloud Foundry to build their own PaaS offerings.

Compared to IaaS, PaaS improves the developer experience by handling deployment and application runtimes. Cloud Foundry provided these key advantages:

- Avoiding vendor lock-in: applications built on it were portable across PaaS implementations.

- Support for diverse infrastructure environments and scaling needs.

- Comprehensive support for major languages like Java, Ruby, and JavaScript, and databases like MySQL and PostgreSQL.

- A set of packaging and distribution tools for deploying applications.

Cloud Foundry relied on Linux containers under the hood to provide isolated application sandbox environments. However, this core container technology powering Cloud Foundry was not exposed as a user-facing feature or highlighted as a key architectural component.

The companies offering Cloud Foundry PaaS solutions overlooked the potential of unlocking containers as a developer tool. They failed to recognize how containers could be transformed from an internal isolation mechanism to an externalized packaging format.

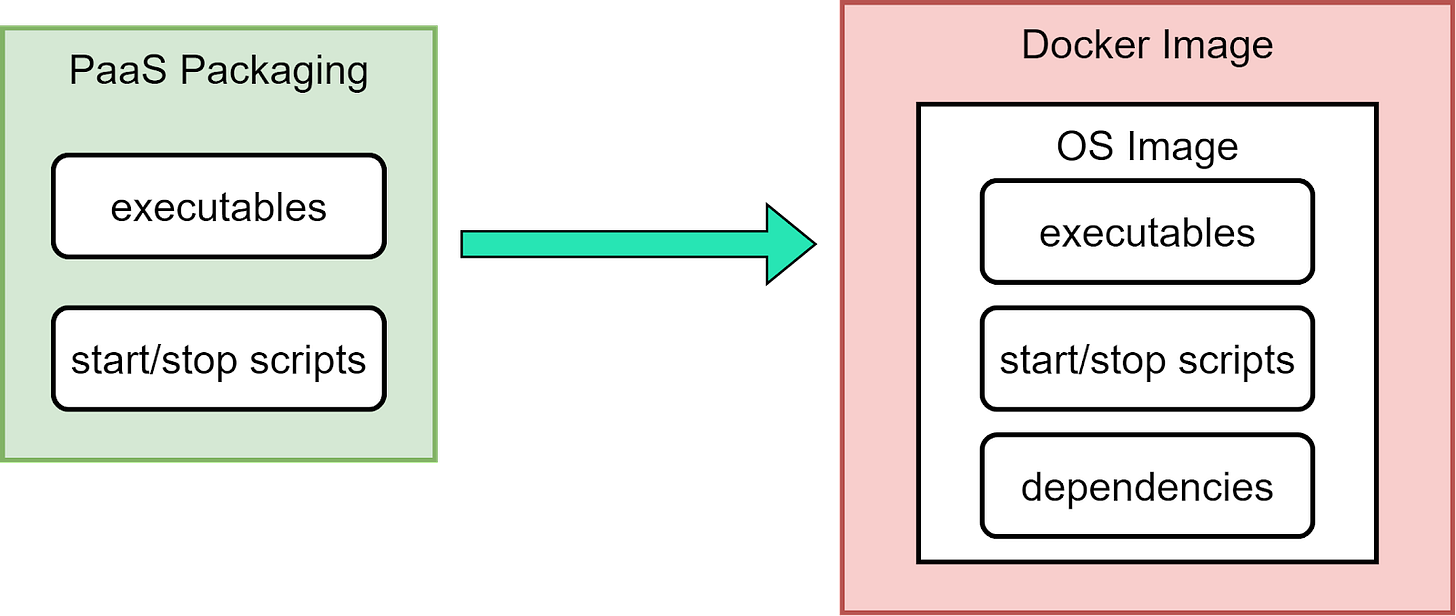

Docker became popular by solving two key PaaS packaging problems with container images:

- Bundling the app, configs, dependencies, and OS userland (everything except the kernel) into a single deployable image

- Keeping the local development environment consistent with the cloud runtime environment
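One reason the same image behaves identically on a laptop and in the cloud is that image layers are content-addressed. Each layer is identified by the SHA-256 digest of its bytes, so a given digest always refers to exactly the same content. The Go sketch below illustrates the idea with a simplified manifest. The Manifest type and the helper functions are hypothetical and much simpler than the real OCI image manifest format.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
)

// digest returns an OCI-style content address such as "sha256:e3b0...".
func digest(content []byte) string {
	sum := sha256.Sum256(content)
	return "sha256:" + hex.EncodeToString(sum[:])
}

// Manifest is a hypothetical, simplified image description:
// an ordered list of layer digests, base layer first.
type Manifest struct {
	Layers []string
}

// buildManifest addresses each layer by its content.
func buildManifest(layers ...[]byte) Manifest {
	m := Manifest{}
	for _, l := range layers {
		m.Layers = append(m.Layers, digest(l))
	}
	return m
}

// sameImage reports whether two manifests reference identical layers in the
// same order, i.e. whether they describe byte-for-byte identical filesystems.
func sameImage(a, b Manifest) bool {
	if len(a.Layers) != len(b.Layers) {
		return false
	}
	for i := range a.Layers {
		if a.Layers[i] != b.Layers[i] {
			return false
		}
	}
	return true
}
```

Because an unchanged base layer keeps the same digest, a host that already has it only needs to fetch the layers that actually changed.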

The diagram below shows a comparison.

How does Docker work?

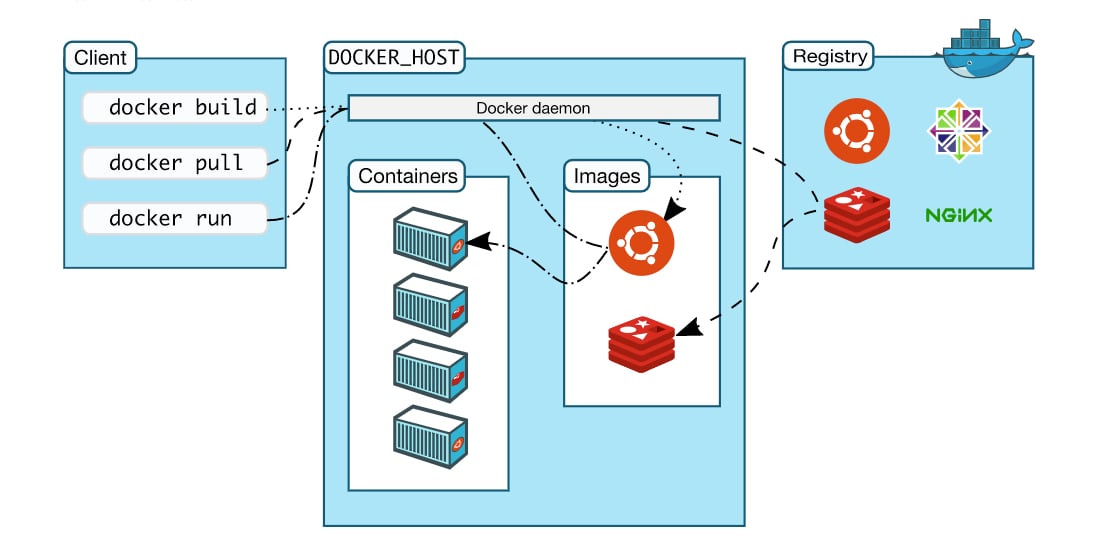

Docker runs lightweight containers on your desktop. Here's what happens when you run "docker build", "docker pull", or "docker run":

1. The Docker client talks to the Docker daemon

2. The Docker daemon manages containers and images

3. A Docker registry, such as Docker Hub, stores images

When you "docker run" a container:

- Pull the image from the registry if it isn't cached locally

- Create a new container

- Allocate a read-write filesystem layer for it on top of the image

- Connect it to a network

- Start the container

Docker uses containerization, not hardware virtualization. Containers share the host kernel, so they don't need a full OS image.

Docker isolates resources with cgroups and namespaces instead of duplicating them. Each container gets its own filesystem but doesn't duplicate a full OS.

Layered filesystems let containers share read-only image layers, which saves storage and makes new container environments quick to create.
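The copy-on-write behaviour behind layered filesystems can be modelled in a few lines of Go. This is a hypothetical in-memory model, not how overlayfs is implemented. Reads search the container's writable layer first, then the shared read-only image layers from top to bottom. Writes and deletions only touch the writable layer, and deletions are recorded as "whiteouts" that hide files in lower layers.

```go
package main

// layer is a hypothetical stand-in for a filesystem layer: path -> content.
type layer map[string]string

// container stacks a private writable layer over shared read-only image layers.
type container struct {
	image     []layer         // read-only, base first; shared between containers
	upper     layer           // this container's private writable layer
	whiteouts map[string]bool // paths deleted in this container only
}

func newContainer(image []layer) *container {
	return &container{image: image, upper: layer{}, whiteouts: map[string]bool{}}
}

// read resolves a path from the top of the stack downwards.
func (c *container) read(path string) (string, bool) {
	if v, ok := c.upper[path]; ok {
		return v, true
	}
	if c.whiteouts[path] {
		return "", false
	}
	for i := len(c.image) - 1; i >= 0; i-- {
		if v, ok := c.image[i][path]; ok {
			return v, true
		}
	}
	return "", false
}

// write never modifies image layers; new content lands in the upper layer.
func (c *container) write(path, content string) {
	delete(c.whiteouts, path)
	c.upper[path] = content
}

// remove hides a path for this container without touching shared layers.
func (c *container) remove(path string) {
	delete(c.upper, path)
	c.whiteouts[path] = true
}
```

Two containers created from the same image share its layers. Each one's changes stay in its own upper layer, which is why starting another container costs almost no extra disk space.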

Docker is a great packaging technology: it bundles the app, libraries, and dependencies, everything needed to run. This standardized packaging makes Docker images popular in the cloud, even when container runtimes like containerd or CRI-O are used instead of Docker itself, because Docker images follow the OCI image specification that these runtimes understand.

So Docker provides lightweight, isolated environments on desktops, while also being an excellent packaging technology for apps in the cloud.

Docker Swarm vs Kubernetes: A Practical Comparison

Docker Swarm is a container orchestration tool for managing and deploying containerized applications. It allows you to create a cluster of Docker nodes (known as a swarm) and deploy services to the cluster. Docker Swarm is lightweight and straightforward, allowing you to deploy your containerized software project in minutes.

To manage and deploy applications efficiently, Docker Swarm offers the following capabilities:

Cluster Management: You can create a cluster of nodes to manage the application containers. A node can be a manager or a worker (or both).

Service Abstraction: Docker Swarm introduces the "service" concept, which lets you set the network configuration, resource limits, and number of container replicas. By managing services, Docker Swarm ensures the application's desired state is maintained.

Load Balancing: Swarm offers built-in load balancing, which allows services inside the cluster to interact without you having to configure a load balancer manually.

Rolling Back: Rolling a service back to its previous version is supported in case of a failed deployment.

Fault Tolerance: Docker Swarm automatically detects failed nodes and containers and reschedules the affected tasks as new containers, so users can keep using the application without noticing any problems.

Scalability: With Docker Swarm, you have the flexibility to adjust the number of replicas for your containers easily. This allows you to scale your application up or down based on the changing demands of your workload.
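The service abstraction, fault tolerance, and scaling features above all rest on one idea. The manager compares the desired state, for example three replicas of a service, with the observed state, and schedules tasks (Swarm's name for replicas) to close the gap. The Go sketch below is a hypothetical, heavily simplified reconciliation step, not SwarmKit's actual code.

```go
package main

// task is a hypothetical record of one running replica.
type task struct {
	ID      string
	Healthy bool
}

// reconcile returns the task IDs to remove (failed tasks, plus any surplus
// healthy ones) and how many new tasks to start so that exactly `desired`
// healthy replicas remain.
func reconcile(desired int, running []task) (remove []string, start int) {
	healthy := 0
	for _, t := range running {
		if !t.Healthy {
			remove = append(remove, t.ID) // fault tolerance: drop failed tasks
			continue
		}
		healthy++
		if healthy > desired {
			remove = append(remove, t.ID) // scale down: surplus replica
		}
	}
	if healthy < desired {
		start = desired - healthy // scale up or backfill failures
	}
	return remove, start
}
```

Running a step like this in a loop is why a "docker service scale" command and a crashed container look the same to the manager: both simply change the gap between desired and observed state.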

Docker Swarm architecture

In summary, the Swarm cluster consists of several vital components that work together to manage and deploy containers efficiently:

Manager nodes are responsible for receiving client commands and assigning tasks to worker nodes. Inside each manager node are the Service Discovery and Scheduler components. The former is responsible for collecting information on the worker nodes, while the latter finds suitable worker nodes to assign tasks to.

Worker nodes are solely responsible for executing the tasks assigned by a manager node.

In the above diagram, the deployment steps typically work like this:

The client machines send commands to a manager node, let's say to deploy a new PostgreSQL database to the Swarm cluster.

The Swarm manager finds the appropriate worker node to assign tasks for creating the new deployment.

Finally, the worker node will create new containers for the PostgreSQL database and manage the containers' states.
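The manager's placement decision described above can be pictured as a filter-then-rank pass over the workers. The Go sketch below filters out nodes that are not ready or lack enough free memory. It then prefers the node running the fewest tasks, which roughly approximates Swarm's spread-style placement. The node fields and the exact ranking are assumptions for illustration. The real scheduler also applies placement constraints, preferences, and other filters.

```go
package main

// node is a hypothetical view of a worker as the scheduler sees it.
type node struct {
	Name       string
	Ready      bool
	FreeMemMiB int
	Tasks      int
}

// pickNode returns the ready node with enough free memory and the fewest
// running tasks, or "" if none qualifies. Ties go to the node listed first.
func pickNode(nodes []node, needMemMiB int) string {
	best := -1
	for i, n := range nodes {
		if !n.Ready || n.FreeMemMiB < needMemMiB {
			continue // filter phase: node cannot run this task
		}
		if best == -1 || n.Tasks < nodes[best].Tasks {
			best = i // rank phase: spread load across nodes
		}
	}
	if best == -1 {
		return ""
	}
	return nodes[best].Name
}
```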

Deploying the application with Docker Swarm

1. Create a Docker Swarm cluster

In a Docker Swarm cluster, you will have one or more manager nodes to distribute tasks to one or more worker nodes. To simplify this demonstration, you will only create the manager node responsible for deploying the services and handling the application workload.

To create a Docker Swarm cluster, run the following command:

```bash
docker swarm init
```

You should see the following output confirming that the current node is a manager, along with instructions for adding a worker node to the cluster:

```
Swarm initialized: current node (rpuk92y8wypwqwnv5kqzk5fik) is now a manager.

To add a worker to this swarm, run the following command:

    docker swarm join --token <token> <ip_address>

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.
```

2. Building the application Docker image

Inside the application directory, you have a Dockerfile with the following contents:

```dockerfile
FROM golang:1.20

WORKDIR /app

COPY go.mod go.sum ./
RUN go mod download

COPY main.go ./
COPY config/db.go ./config/db.go
COPY handler/handlers.go ./handler/handlers.go

RUN CGO_ENABLED=0 GOOS=linux go build -o /out/main ./

EXPOSE 8081

# Run
ENTRYPOINT ["/out/main"]
```

These instructions tell Docker to start from the Go 1.20 image, copy the files from the project directory into the image, and build an application executable named main in the /out directory. EXPOSE documents that the container listens on port 8081 (the port is actually published at run or deploy time), and ENTRYPOINT runs the /out/main binary when the container starts.

To build the application image, run the following command. Remember to replace the <username> placeholder with your DockerHub username:

```bash
docker build -t <username>/blog .
```

Now that you have successfully built the application image, log into your DockerHub account from the current session so that you can push the image to your account:

```bash
docker login
```

Once login is successful, run the following command to push the image to DockerHub:

```bash
docker push <username>/blog
```

3. Deploying the application to the Swarm cluster

In this section, you will deploy the Go application and the PostgreSQL database to the Swarm cluster. Within the swarm directory, you have the following two files:

- initdb.sql initializes the database state by creating a new table named "posts" when the PostgreSQL database is deployed to the Swarm cluster.

- docker-compose.yml defines the PostgreSQL database and application services to deploy to the Swarm cluster.

Open the docker-compose.yml file in your text editor and update the <username> placeholder to your Docker username:

swarm/docker-compose.yml

```yaml
# Use postgres/example user/password credentials
version: '3.6'

services:
  db:
    image: postgres
    environment:
      - POSTGRES_DB=blog_db
      - POSTGRES_USER=blog_user
      - POSTGRES_PASSWORD=blog_password
    ports:
      - '5432:5432'
    volumes:
      - ./initdb.sql:/docker-entrypoint-initdb.d/initdb.sql
    networks:
      - blog-network

  blog:
    image: <username>/blog
    environment:
      - POSTGRES_DB=blog_db
      - POSTGRES_USER=blog_user
      - POSTGRES_PASSWORD=blog_password
      - POSTGRES_HOST=db:5432
    ports:
      - '8081:8081'
    volumes:
      - .:/app
    networks:
      - blog-network

networks:
  blog-network:
    name: blog-network
```

This file instructs Docker Swarm to create two services, db and blog, and a network named blog-network:

- The db service creates a PostgreSQL database using the specified configuration. Its state is initialized using the initdb.sql file, and it uses the blog-network network.

- The blog service runs the blog application using the Docker image you previously pushed to DockerHub. It also needs environment variables so that the application can access the PostgreSQL database. This service uses the blog-network network too, which lets it reach the db service at the address db:5432.

- The top-level networks section creates a network named blog-network so the two services can communicate over it.
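On the application side, the blog service has to turn those environment variables into a database connection string. The project's config/db.go is not shown, so the Go sketch below is a hypothetical version. It builds a PostgreSQL URL from POSTGRES_USER, POSTGRES_PASSWORD, POSTGRES_HOST, and POSTGRES_DB. Because POSTGRES_HOST is set to db:5432, it already carries the port, and the name db resolves to the database service on blog-network. The sslmode=disable setting is an assumption suited to this internal demo network.

```go
package main

import (
	"errors"
	"net/url"
	"os"
)

// dsnFromEnv builds a PostgreSQL connection URL from the variables set in
// docker-compose.yml. getenv is injected to keep the function testable.
func dsnFromEnv(getenv func(string) string) (string, error) {
	user, pass := getenv("POSTGRES_USER"), getenv("POSTGRES_PASSWORD")
	host, db := getenv("POSTGRES_HOST"), getenv("POSTGRES_DB")
	if user == "" || host == "" || db == "" {
		return "", errors.New("POSTGRES_USER, POSTGRES_HOST and POSTGRES_DB must be set")
	}
	u := url.URL{
		Scheme:   "postgres",
		User:     url.UserPassword(user, pass), // escapes special characters
		Host:     host,                         // "db:5432" already includes the port
		Path:     "/" + db,
		RawQuery: "sslmode=disable", // assumption: no TLS inside the demo network
	}
	return u.String(), nil
}

// dsn is the form main would use, reading the real environment.
func dsn() (string, error) { return dsnFromEnv(os.Getenv) }
```

With the values from docker-compose.yml, this yields postgres://blog_user:blog_password@db:5432/blog_db?sslmode=disable.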

To deploy the stack to the running Swarm cluster, run the following commands in turn:

```bash
cd swarm
docker stack deploy --compose-file docker-compose.yml blogapp
```

Output

```
Creating network blog-network
Creating service blogapp_db
Creating service blogapp_blog
```

Afterward, run the following command to verify that the services are running:

```bash
docker stack services blogapp
```

You should see the following results:

Output

```
ID             NAME           MODE         REPLICAS   IMAGE                  PORTS
uptqkx630zpy   blogapp_blog   replicated   1/1        username/blog:latest   *:8081->8081/tcp
jgb19lcx32y6   blogapp_db     replicated   1/1        postgres:latest        *:5432->5432/tcp
```

What is Kubernetes?

Kubernetes is a container orchestration tool for managing and deploying containerized applications. It works with any container runtime that implements its Container Runtime Interface (CRI), such as containerd and CRI-O. Docker Engine can still be used through the cri-dockerd adapter maintained by Mirantis, since Kubernetes removed its built-in Docker support (dockershim) in v1.24. Images built with Docker run unchanged on all of these runtimes. Kubernetes is feature-rich and highly configurable, which makes it ideal for deploying complex applications, but it has a steep learning curve.

Some of the key features of Kubernetes are listed below:

Container Orchestration with Pods: A pod is the smallest deployable unit in Kubernetes and wraps one or more containers. Kubernetes deploys applications by creating and managing pods.

Service Discovery: Kubernetes gives each Service a stable DNS name and virtual IP, so containers within the same cluster can find and talk to each other easily.

Load Balancing: Kubernetes' load balancer allows access to a group of pods via an external network. Clients from outside the Kubernetes cluster can access the pods running inside the Kubernetes cluster via the balancer's external IP. If any of the pods in the group goes down, client requests will automatically be forwarded to other pods, allowing the deployed applications to be highly available.

Automatic Scaling: You can scale your application in or out by adjusting the number of pod replicas based on resource utilization or custom-defined metrics.

Self-Healing: If one of your pods goes down, Kubernetes will try to recreate that pod automatically so that normal operation is restored.

Persistent Storage: It provides support for various storage options, allowing you to persist data beyond the lifecycle of individual pods.

Configuration and Secrets Management: Kubernetes allows you to store and manage configuration data and secrets as separate objects (ConfigMaps and Secrets), decoupling sensitive information from the application code.

Rolling back: If there's a problem with your deployment, you can easily transition to a previous version of the application.

Resource Management: With Kubernetes, you can flexibly set the resource constraints when deploying your application.

Extensibility: Kubernetes has a vast ecosystem and can be extended with numerous plugins and tools for added functionality. You can also interact with Kubernetes components using Kubernetes APIs to deploy your application programmatically.
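Automatic scaling is a good example of how concrete these features are. The Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). It skips scaling when that ratio is within a tolerance, 10% by default. The Go sketch below implements just that rule. It leaves out the min/max replica bounds, stabilization windows, and pod readiness handling that the real controller applies, and the function name is hypothetical.

```go
package main

import "math"

// desiredReplicas applies the HPA scaling rule: scale in proportion to how
// far the observed metric is from its target, ignoring small deviations.
func desiredReplicas(current int, currentMetric, targetMetric, tolerance float64) int {
	ratio := currentMetric / targetMetric
	if math.Abs(ratio-1.0) <= tolerance {
		return current // close enough to target: no change
	}
	return int(math.Ceil(float64(current) * ratio))
}
```

For example, two pods averaging 90% CPU against a 60% target give ceil(2 * 1.5) = 3 replicas, while four pods at 30% give ceil(4 * 0.5) = 2.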

Kubernetes architecture


Quotes

“If deploying software is hard, time-consuming, and requires resources from another team, then developers will often build everything into the existing application in order to avoid suffering the new deployment penalty.”

― Karl Matthias, Docker: Up & Running: Shipping Reliable Containers in Production